Artificial intelligence (AI) is poised to transform many industries by ushering in an era of intelligent machines. One of its biggest selling points is the promise of improved accuracy and fewer errors.

Common quality measures for a classifier are precision, recall, and the F1 score. For good quality, a system needs to produce as few “false positives” and “false negatives” as possible. Together, these false positives and false negatives are termed “misclassifications”.
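To make these measures concrete, here is a minimal sketch of how precision, recall, and F1 follow from the counts of true positives, false positives, and false negatives. The function name `classification_metrics` and the sample labels are illustrative assumptions, not part of any Wipro tooling.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute precision, recall and F1 for one class of a classifier.

    y_true and y_pred are equal-length sequences of labels;
    `positive` is the label treated as the positive class.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    # Guard against division by zero when a count is empty.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)

    return {"tp": tp, "fp": fp, "fn": fn,
            "precision": precision, "recall": recall, "f1": f1}


# Made-up labels for illustration only: 1 = positive, 0 = negative.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Every false positive lowers precision and every false negative lowers recall, which is why both kinds of misclassification have to stay low for the F1 score to stay high.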

Imagine the impact misclassification can have on industries. One of the most promising use cases of AI is probably in healthcare. In lung cancer screening, for instance, studies found that AI was outperforming radiologists in image-based diagnosis, but what if misclassification creeps in? Or take retail: Amazon Go stores are based on computer vision and deep learning algorithms. People walk into a store, pick up items, and leave. Identification, inventory, and checkout are all handled by algorithms that identify who the shopper is and what they have picked up. Misclassifying these images can bill the wrong people or the wrong items and mess up inventory, turning the entire business model on its head.

Deep learning has been a black box, which makes dealing with this situation more complicated. Wipro has been working on opening up the deep learning network to make its classification decisions more “explainable and transparent”. This has been done by opening up the layers of the neural network to understand the activations and the layer-wise relevance propagation (LRP) relevance at each layer. Can this open network shed light on the possibility of misclassification?

With relevance and activations available at each layer, the neural network may exhibit a pattern when it decides on a class. The question was: will the network exhibit fuzziness when there is confusion about the class of a test input? And if so, is there a way to tap into this?

Just as the human mind exhibits fuzziness when it is uncertain about a decision, the relevance and activation patterns of neurons at different layers show fuzziness when there is confusion about a decision. In experiments on text and images, this approach identified misclassification instances 60-70% of the time. However, it also increases the number of “false positive” alerts, which is another area to focus on in this journey to make AI more production-ready. Still, the ability to predict misclassifications is a big step forward. Combined with transparency, this ability will go a long way toward gaining human “trust” and accelerating acceptance of AI solutions.

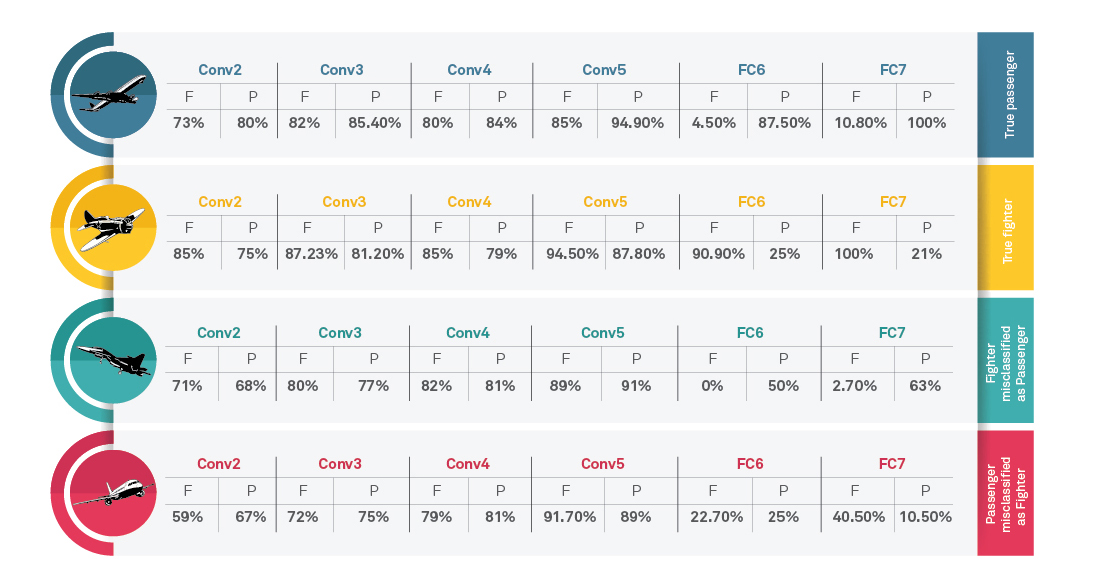

Below are some of the results we have seen for a binary image classifier that we created to distinguish Fighter (F) from Passenger (P) airplanes:

* From the neuron activations, we can see a pattern: the percentage of neurons that get activated is generally 100% for a correct classification and generally less than 75% for a misclassification. This gap indicates fuzziness or confusion, and it can be used to raise an alert.
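The alerting idea in this result can be sketched in a few lines. This is only an illustration under stated assumptions, not the implementation used in the experiments: it assumes a neuron counts as "activated" when its output is greater than zero, that the activations of one layer are available as a plain list of numbers, and that the 75% figure reported above is used directly as the alert threshold. The function names and sample values are hypothetical.

```python
def activated_fraction(activations, eps=0.0):
    """Return the fraction of neurons whose activation exceeds eps.

    Assumption: "activated" means a strictly positive output,
    as with a ReLU layer. The source does not define the term.
    """
    if not activations:
        raise ValueError("activations must not be empty")
    active = sum(1 for a in activations if a > eps)
    return active / len(activations)


def flag_possible_misclassification(activations, threshold=0.75):
    """Raise an alert when fewer than `threshold` of the neurons fire.

    The 0.75 default mirrors the "less than 75%" observation above;
    a real system would need to tune it per model and per layer.
    """
    return activated_fraction(activations) < threshold


# Made-up activation vectors for illustration only.
confident_input = [0.9, 1.4, 0.2, 0.7]   # every neuron fires
confused_input = [0.9, 0.0, 0.0, 0.7]    # only half of the neurons fire

print(flag_possible_misclassification(confident_input))
print(flag_possible_misclassification(confused_input))
```

A single fixed threshold is the simplest possible rule. Since this kind of alerting also raises false positive alerts, as noted earlier, the threshold is a trade-off between catching misclassifications and raising unnecessary alerts.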

How have you dealt with AI misclassifications? What are your thoughts on the way forward?

Discuss with me @ Vinutha BN (CTO Office) <vinutha.narayanmurthy@wipro.com>


Vinutha BN

CTO AI research

Vinutha heads CTO AI research and has 25+ years of industry experience. She is a firm believer that AI needs to be governed and controlled to ensure that it is always in "assist human" mode.