Introduction

Today's IT landscape is defined by two terms: “high velocity” and “consumer experience”. In the earlier white papers, “Data management in the digital realm - trends, challenges, and other constraints” and “Data management in the digital realm - breaking dawn: optimizing the usual”, we saw the changing trends and challenges faced by IT departments. We also saw the solutions and hygiene that IT must put in place to counter basic challenges and to enable an organization to survive in today's hypercompetitive environment. The proliferation and adoption of application-agnostic hybrid infrastructure platforms introduces many challenges that were never seen before. Hence, advanced mechanisms that enable an organization to pursue agile roadmaps will be a key differentiator. These mechanisms are expected to manage and protect data, fuel business strategies, and handle heterogeneity, thereby driving innovation within shrinking or flat budgets.

Advanced mechanisms

Backup and data protection practices have matured to meet the demands of conventional workloads, but are they enough to meet the expectations of modern IT?

The basic question we need to address is:

Organizations cannot afford to underestimate the importance of big data protection and management. A few data management software solutions support this goal by integrating with common big data platforms. This integration delivers deep insights into the environment and enables varied, practical plans for data protection and recovery. Using the intelligent approaches offered by big data protection and management vendors, together with a combination of technologies, a big data environment can be extended to the cloud. This extension makes it possible to recover a big data environment in public cloud infrastructure, ensuring business continuity and flexibility both during disaster recovery testing and during an actual disaster. Organizations thus achieve a big data environment that is protected and resilient to disaster.

With the proliferation of PaaS and SaaS, organizations now use the cloud in new ways, including as the primary platform for hosting applications. Given this, we need to think about a few aspects.

In reality, very few SaaS providers offer data recovery, and those that do charge a heavy price and impose complex terms and conditions. Additionally, initiating a recovery can itself take weeks. In today's world, where just-in-time (JIT) concepts rule, such timelines are hardly acceptable. The truth is that these activities must, or at least should, remain the responsibility of each organization, regardless of how its data is managed, accessed, or stored. Protecting and managing applications and data in the cloud is just as achievable as it is on premises. Just as we keep multiple copies of the same data on and off premises, we can keep multiple copies in the cloud, either in the same cloud or in other clouds. Another approach for applications running in the cloud is to use cloud file-sharing technology with links pointing to an on-premises backup server. This helps bring governance, e-discovery, and compliance to the data landscape.

Now that we have seen that solutions and methodologies are available to perform efficient backups, organizations must address the challenge of locating and accessing protected data. The next set of questions to consider is:

The solution should ensure that data is stored securely in a single, indexed, de-duplicated, storage-agnostic virtual repository that supports recovery, retention, access, and search. In addition, it should be intuitive enough for employees to perform basic restores with minimal training. With end users empowered, IT admins no longer have to be involved in every recovery and restore.
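To make “indexed, de-duplicated” concrete, here is a minimal sketch of how such a repository can work, assuming fixed-size chunking and SHA-256 content addressing. The class and method names (`DedupRepository`, `store`, `restore`, `search`) are hypothetical illustrations, not any vendor's API; real products often use variable-size chunking and persistent, encrypted storage, and the in-memory dictionary here simply stands in for whatever storage backend sits underneath.

```python
import hashlib

# Hypothetical chunk size, kept tiny so repeated chunks are visible in the example.
CHUNK_SIZE = 4


class DedupRepository:
    """Toy repository: content-addressed chunks plus a searchable file index."""

    def __init__(self, chunk_size=CHUNK_SIZE):
        self.chunk_size = chunk_size
        self.chunks = {}  # SHA-256 digest -> chunk bytes; each unique chunk is stored once
        self.index = {}   # logical file name -> ordered list of chunk digests

    def store(self, name, data):
        """Split data into fixed-size chunks and keep only chunks not already held."""
        digests = []
        for start in range(0, len(data), self.chunk_size):
            chunk = data[start:start + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # duplicate chunks are not stored again
            digests.append(digest)
        self.index[name] = digests

    def restore(self, name):
        """Reassemble a file from its indexed chunk digests."""
        return b"".join(self.chunks[d] for d in self.index[name])

    def search(self, substring):
        """Look up files by name (a stand-in for richer metadata search)."""
        return sorted(n for n in self.index if substring in n)


repo = DedupRepository()
repo.store("laptop/report.docx", b"AAAABBBBAAAA")
repo.store("server/report-copy.docx", b"AAAABBBBAAAA")
print(len(repo.chunks))  # 2 unique chunks held for 24 bytes of input
print(repo.restore("laptop/report.docx"))
print(repo.search("report"))
```

Because the same content from an end-user device and a server maps to the same digests, the repository stores it once, while the index still lets either copy be found and restored independently.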

With data management under control, organizations should consider the following:

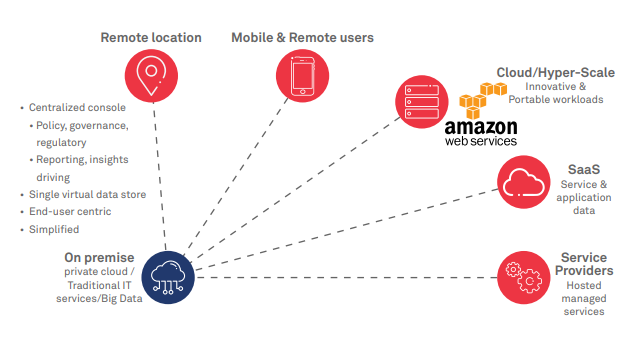

The solution architecture should be planned and implemented with the capability to back up data from various sources, such as end-user devices, databases, physical servers, virtual servers, and the cloud, while meeting varied protection requirements.

Figure 1: Any data, any source, any target, anywhere

Consider an example: files and emails are better archived, while databases are better backed up. Using multiple approaches adds complexity, so consolidating these operations is preferable. The most sought-after solution will be one that integrates deeply with applications and hypervisors, ensures that recoveries are uncorrupted and application-aware, and performs backup, archiving, and reporting in a single operation. Implementing such a solution reduces operational complexity, protection time, and infrastructure costs, and improves administration.

We saw above that the software in the solution must have robust functionality and features that deliver multiple benefits. But what about the hardware that needs to complement the software? These benefits can be delivered only when the software and hardware are in sync and behave as a single system. Let us ask ourselves:

Organizations have to focus on software-defined infrastructure solutions that not only provide strong vendor support and services, support skill development, and integrate tightly in an API economy, but also contain costs and complement the data management software. Looked at another way, employing software-defined storage as a backup target brings flexibility and reduces infrastructure costs. Software-defined storage also reduces vendor lock-in, particularly for hardware procured from storage suppliers. According to MarketsandMarkets, the software-defined storage market was worth $4.72 billion in 2016 and could grow to $22.56 billion by 2021, a compound annual growth rate of 36.7%.¹ Organizations have to harness heterogeneity. Heterogeneity lets organizations capitalize on the strengths of individual components, whether software or hardware, speeds up returns, and improves total cost of ownership (TCO). However, heterogeneity also introduces complexity.
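The growth rate quoted above can be checked from the two market figures with the standard compound annual growth rate (CAGR) formula, (end / start)^(1 / years) - 1. The short sketch below applies it to the cited 2016 and 2021 values over five years; the function name `cagr` is just an illustrative helper.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Software-defined storage market figures cited above, in USD billions.
rate = cagr(4.72, 22.56, 2021 - 2016)
print(f"{rate:.1%}")  # → 36.7%
```

The result matches the cited 36.7%, confirming that the two market values and the growth rate are consistent with each other.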

To sum up

The solution architecture explained here enables data protection and management at a pace that matches high-velocity data growth. It can handle the complexity associated with heterogeneous data sources, data types, and data targets, and with innovative platforms such as PaaS and SaaS. It delivers efficiencies at multiple levels, ensures data availability and restorability, and supports customization and centralized control through a single pane of glass, thereby facilitating monitoring and governance. In addition, the solution is programmable and provides the touch points needed for seamless integration with intelligent software-defined targets and for near-automated deployment and operations, thereby delivering a simplified and rich end-user experience.

Sauradeep Das

Senior Manager, Cloud Infrastructure Services, Wipro Ltd.

He is a seasoned IT professional with 12 years of industry experience, specializing in the infrastructure and telecom domains. He has played versatile roles during his career, including sales, presales, practice development, and business development. He is currently part of the SDx team as a solution architect and is responsible for SDx presales, practice development, and delivery enablement. Sauradeep holds a bachelor's degree in engineering and a master's degree in management. He is passionate about innovation and the adoption of new technologies. He can be reached at sauradeep.das@wipro.com